I develop a system for tracking email “opens,” which allows the sender of an email message to know when their message is opened (read) by the recipient(s). The system comprises (1) a Chrome extension that adds functionality to the Gmail website, (2) a backend server that tracks the email sends and then the subsequent opens, and (3) an addon for the mobile (Android / iOS) Gmail apps.

Creating a SQLAlchemy Dialect for Airtable

In this post, I develop a SQLAlchemy Dialect for Airtable. This builds on my prior work building a Dialect for GraphQL APIs. With an Airtable Dialect, Apache Superset is able to use Airtable Bases as a data source, and Pandas can load data directly from Airtable using its native SQL reader. The process of building the Dialect allowed me to better understand the Airtable API and data model, which will be helpful when building further services on top of Airtable. These services might include directly exposing the Airtable Base with GraphQL or UI builders backed by Airtable (like Retool). You can view the code for the Dialect here. It’s also pip installable as sqlalchemy-airtable.

Running Apache Superset Against a GraphQL API

In this blog post I walk through my journey of getting Apache Superset to connect to an arbitrary GraphQL API. You can view the code I wrote to accomplish this here. It’s also published to PyPI.

Pylearn2 MLPs With Custom Data

I decided to revisit the state map recognition problem, only this time, rather than using an SVM on the Hu moments, I used an MLP. This is not the same as using a “deep neural network” on the raw image pixels themselves, as I am still using domain-specific knowledge to build my features (the Hu moments).
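To make the "domain-specific features" point concrete, here is a minimal sketch of the first of the seven Hu moments, written in plain Python. It is not the code from the post: the pixel list, the function names, and the choice to show only the first invariant are assumptions for illustration. It shows that the value does not change when a shape is rotated by 90 degrees and moved off-center, which is why such features suit maps that are not in the "North is up" orientation.

```python
def raw_moment(pixels, p, q):
    """Raw moment M_pq of a binary shape given as (x, y) foreground pixels."""
    return sum((x ** p) * (y ** q) for x, y in pixels)


def first_hu_moment(pixels):
    """First Hu invariant: eta_20 + eta_02 (normalized central moments)."""
    m00 = raw_moment(pixels, 0, 0)          # area of the shape
    cx = raw_moment(pixels, 1, 0) / m00     # centroid x
    cy = raw_moment(pixels, 0, 1) / m00     # centroid y
    # Central moments are taken about the centroid, which removes translation.
    mu20 = sum((x - cx) ** 2 for x, _ in pixels)
    mu02 = sum((y - cy) ** 2 for _, y in pixels)
    # For p + q = 2 the normalization is m00 ** (1 + (p + q) / 2) = m00 ** 2.
    return (mu20 + mu02) / m00 ** 2


# Hypothetical L-shaped "map" made of six pixels.
shape = [(0, 0), (1, 0), (2, 0), (3, 0), (0, 1), (0, 2)]

# Rotate by 90 degrees, (x, y) -> (-y, x), then shift off-center.
rotated_and_shifted = [(-y + 10, x + 7) for x, y in shape]

original_value = first_hu_moment(shape)
transformed_value = first_hu_moment(rotated_and_shifted)
print(original_value, transformed_value)
```

The two printed values agree up to floating-point error. On a discrete pixel grid, invariance under rescaling and arbitrary rotation angles holds only approximately, so a real pipeline would compute all seven moments from the rasterized image and let the classifier absorb the remaining noise.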

As of my writing this blog post, scikit-learn does not support MLPs (see this GSoC for plans to add this feature). Instead I turn to pylearn2, the machine learning library from the LISA lab. While pylearn2 is not as easy to use as scikit-learn, there are some great tutorials to get you started.

Map Recognition

The Challenge

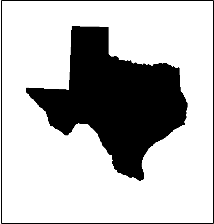

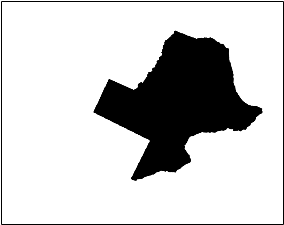

I wanted to write a program to identify a map of a US state.

To make life a little more challenging, this program had to work even if the maps are rotated and are no longer in the standard “North is up” orientation. Further, the map images may be off-center and rescaled.

Data Set

The first challenge was getting a set of 50 solid-filled maps, one for each of the states. Some Google searching around led to this page which has outlined images for each state map. Those images have not just the outline of the state, but also text with the name of the state, the website’s URL, a star showing the state capital and dots indicating what I assume are major cities. In order to standardize the images, I removed the text and filled in the outlines. The fill took care of the star and the dots.

Octopress Paper Cuts

Now that I have started writing a few blog posts, I have come across a few “paper cuts” with the (current) Octopress framework. Some quick background on Octopress: I am currently running v2.0 of Octopress, which appears to have been released in July 2011. According to this blog, v2.1 was scrapped in favor of a more significant v3.0. From the Twitter feed and the GitHub page, v3.0 seems to be in active development but not yet released. That leaves me using v2.0 for the time being.

@amesbah The current release is a bit neglected right now. The next version is being worked on quite a bit though. https://t.co/a3cogy8z2O

— Octopress (@octopress) May 15, 2014

Brad Katsuyama’s Fleeting Liquidity: A Simple Analogy for What He Sees

From Michael Lewis’ The Wolf Hunters of Wall Street:

Before RBC acquired this supposed state-of-the-art electronic-trading firm, Katsuyama’s computers worked as he expected them to. Suddenly they didn’t. It used to be that when his trading screens showed 10,000 shares of Intel offered at $22 a share, it meant that he could buy 10,000 shares of Intel for $22 a share. He had only to push a button. By the spring of 2007, however, when he pushed the button to complete a trade, the offers would vanish. In his seven years as a trader, he had always been able to look at the screens on his desk and see the stock market. Now the market as it appeared on his screens was an illusion.

For someone making $1.5 million a year running RBC’s electronic-trading operation, I am surprised how little Brad understands about liquidity. I don’t just mean the complicated American equity markets; I mean liquidity in general. A simple analogy illustrates why the market is in fact not an illusion and how a similar experience can happen in other markets.

Hello World!

This is my inaugural, requisite post on my personal blog.

I have chosen to run my blog with Octopress rather than WordPress or Blogger. Why? This blogger and this blogger do a good job of explaining. Not only does Octopress allow me to source control the entire blog, but it also lets me very easily host the site using GitHub Pages.