In this blog post I walk through my journey of getting Apache Superset to connect to an arbitrary GraphQL API. You can view the code I wrote to accomplish this here. It’s also published to PyPI.

I wanted a way for Superset to pull data from a GraphQL API so that I could run this powerful and well used business intelligence (BI) tool on top of the breadth of data sources that can be exposed through GraphQL APIs. Unfortunately Superset wants to connect directly with the underlying database (from its landing page: “Superset can connect to any SQL based datasource through SQLAlchemy”).

Generally this constraint isn’t that restrictive as data engineering best practices are to set up a centralized data warehouse to which various production databases would be synchronized. The database used for the data warehouse (e.g. Amazon Redshift, Google BigQuery) can then be queried directly by Superset.

The alternative, “lazy” approach is to eschew setting up this secondary data storage system and instead point the BI tool directly at the production database (or, slightly better, at a read replica of that database). This alternative approach is the route often chosen by small, fast moving, early-stage product engineering teams that either lack the resources or know-how to engage in proper data engineering. Or the engineers just need something “quick and dirty” to empower the business users to explore and analyze the data themselves and get building ad-hoc reports off the engineering team’s plate.

I found myself in in the latter scenario, where I wanted to quickly connect a BI tool to our systems without needing to set up a full data warehouse. There was a twist though. While our primary application’s database is Postgres (which is supported by Superset), we augment that data using various external / third-party APIs and expose all of this through a GraphQL API1. In some cases there are data transformations and business logic in the resolvers that I wanted to avoid having to re-implement in the BI tool.

And with that I set out to connect Superset (my BI tool of choice) directly to my GraphQL API. It turns out someone else also had this idea and had kindly filed a GitHub ticket back in 2018. The ticket unfortunately was closed with:

a) It’s possible to extend the built-in connectors. “The preferred way is to go through SQLAlchemy when possible.”

b) “All datasources ready for Superset consumption are single-table”.

While (b) could be an issue with GraphQL as GraphQL does allow arbitrary return shapes, if we confine ourselves to top level Lists or Connections and flatten related entities, the data looks “table-like”. (a) boiled down to writing a SQLAlchemy Dialect and associated Python DB-API database driver. A quick skim of both the SQLAlchemy docs and the PEP got me a little worried at the what I might be attempting to bite off.

Before I gave up hope, I decided to take a quick look at the other databases that Superset listed to see if there was one I could perhaps base my approach off of. Support for Google Sheets caught my attention and upon digging further, I saw it was based on a library called shillelagh, whose docs say “Shillelagh allows you to easily query non-SQL resources” and “New adapters are relatively easy to implement. There’s a step-by-step tutorial that explains how to create a new adapter to an API.” Intriguing. On the surface this was exactly what I was looking for.

I took a look at the step-by-step tutorial, which very cleanly walks you through the process of developing a new Adapter. From the docs: “Adapters are plugins that make it possible for Shillelagh to query APIs and other non-SQL resources.” Perfect!

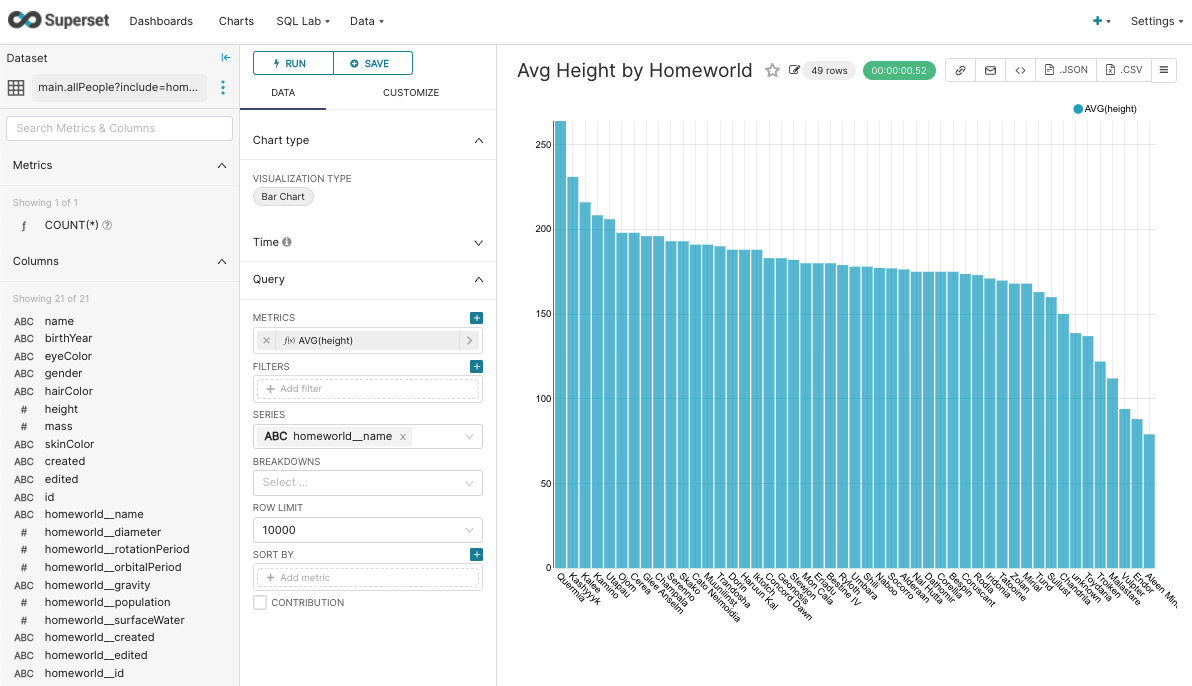

The last important detail that I needed to figure out what how to map the arbitrary return shape from the API to something tabular. In particular I wanted the ability to leverage the graph nature of GraphQL and pull in related entities. However the graph may be infinitely deep, so I can’t just crawl all related entities. I solved this problem by adding an “include” concept that specified what related entities should be loaded. this include is specified as a query parameter on the table name. In the case of SWAPI, I might want to query the allPeople connection. This would then create columns for all the scalar fields of the Nodes returned. If I wanted to include the fields from the species, the table name would be allPeople?include=species. This would add to the columns the fields on the linked Species. This path could be further traversed as allPeople?include=species,species__homeworld.

The majority of the logic is in the Adapter class and it roughly boils down to:

1. Taking the table name (exposed on the GraphQL API as a field on query) along with any path traversal instructions and resolving the set of “columns” (flattened fields) and their types. This leverages the introspection functionality of GraphQL APIs.

2. Generating the needed GraphQL query and then mapping the results to a row-like structure.

I also wrote my own subclass of APSWDialect, which while not documented in shillelagh’s tutorial was the approach taken for the gsheets:// Dialect. This comes with the benefit of being able to expose the list of tables supported by the Dialect. This class leverages the GraphQL.

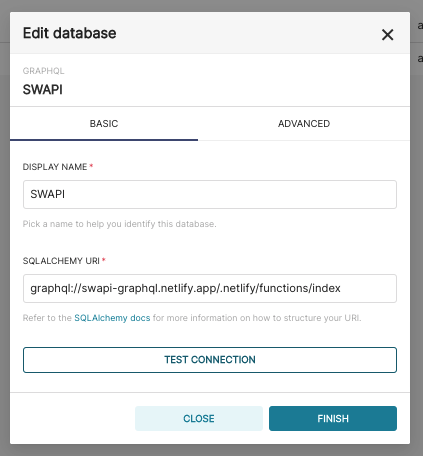

Once the graphql Dialect is registered with SQLAlchemy, adding the GraphQL API to Superset as a Database is as easy as creating a standard Database Url where the host and port (optional) are the host and port of the API and the database portion is the path: graphql://host(:port)/path/to/api2.

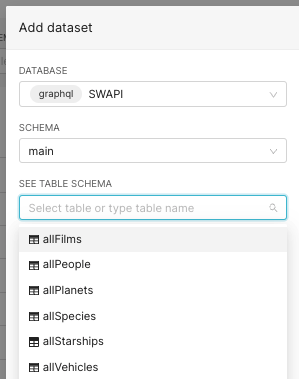

Once the Database is defined, the standard UI for defining Datasets can then be used, The list of “tables” is auto-populated through introspection of the GraphQL API3:

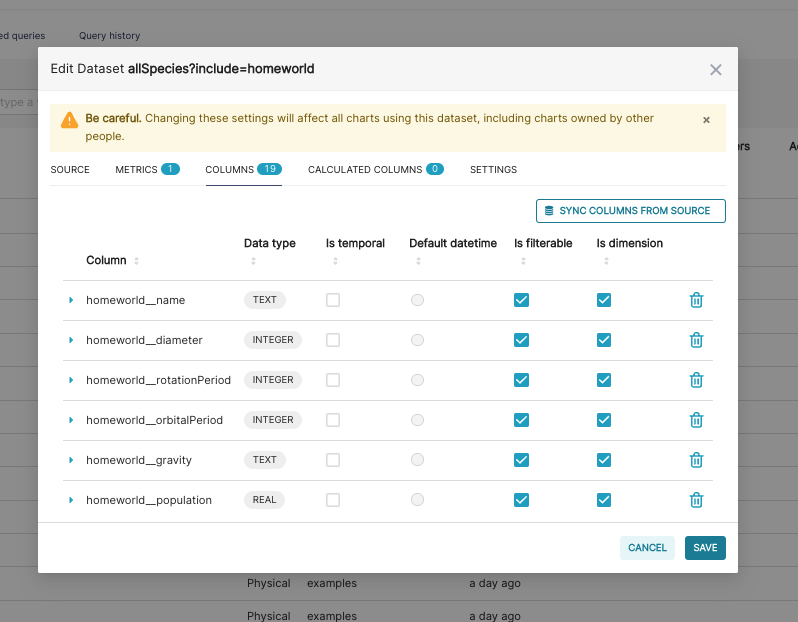

The columns names and their types can also be sync’ed from the Dataset:

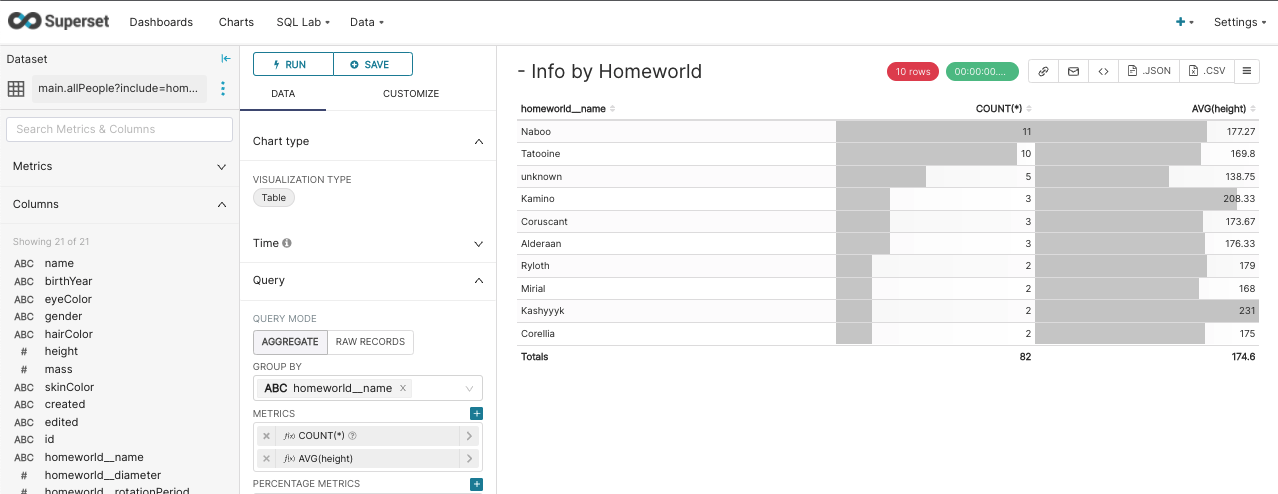

Once the Database and the Dataset are defined, the standard Superset tools like charting can be used:

and exploring:

Longer term there are a whole bunch of great benefits of using accessing data through a GraphQL API which this blog post explores further. GraphQL presents a centralized platform / solution for stitching together data from a number of internal (e.g. microservices) and external sources (SaaS tools + third-party APIs). It is also a convenient location to implement authorization and data redaction.

Appendix

Development Experience

I love to do early stage development work in a Jupyter Notebook with the autoreload extension (basically hot reloading for Python). However this presented a bit of a problem as both SQLAlchemy and shillelagh expected their respective Dialects and Adapters to be registered as entry points.

SQLAlchemy’s docs provided a way to do this In-Process:

1 2 3 | |

However shillelagh did not (feature now requested), but I was able to work around the issue:

1 2 3 4 5 6 | |

-

We use Redis caching + DataLoaders to mitigate the cost of accessing these APIs.↩

-

We do still need to specify the scheme (

http://vshttps://) to use when connecting to the GraphQL API. Options considered:

1. Two different Dialects (e.g.graphql://vsgraphqls://),

2. Different Drivers (e.g.graphql+http://vsgraphql+https://) or

3. Query parameter attached to the URL (e.g.graphql://host:port/path?is_https=0).

I went with (3) as most consistent with other URLs (e.g. for Postgres:?sslmode=require).↩ -

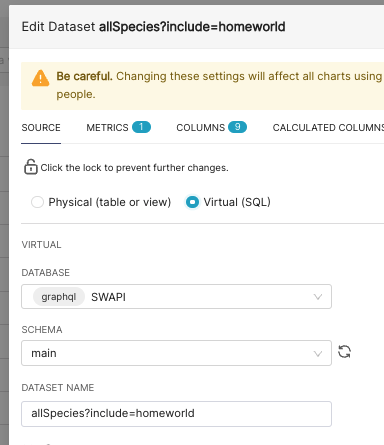

In order to set query params on the dataset name, I did have to toggle the

Virtual (SQL)radio button, which then let me edit the name as free text: ↩

↩