In this post, I develop a SQLAlchemy Dialect for Airtable. This builds on my prior work building a Dialect for GraphQL APIs. With an Airtable Dialect, Apache Superset is able to use an Airtable Bases as a datasource. Pandas can load data directly from Airtable using its native SQL reader. The process of building the Dialect allowed me to better understand the Airtable API and data model, which will be helpful when building further services on top of Airtable. These services might include directly exposing the Airtable Base with GraphQL1 or UI builders backed by Airtable (like Retool). You can view the code for the Dialect here. It’s also pip installable as sqlalchemy-airtable.

Airtable

Airtable is a no-code / low-code SaaS tool, which Wikipedia describes as:

a spreadsheet-database hybrid, with the features of a database but applied to a spreadsheet…Users can create a database, set up column types, add records, link tables to one another

Airtable empowers non-technical (or lazy, technical) users to rapidly build various CRUD applications such as CRMs, project trackers, product catalogs, etc. The Airtable team has done a great job publishing a collection of templates to help users get started. Airtable offers a lot of of great UI elements for CRUD operations that include a very powerful grid view, detail (record-level) views, and “forms”, which are great for data entry. There are a whole bunch more view types documented here, including Gantt, Kanban, and calendar.

Data Visualization and Data Analysis

That being said, performing data visualization and data analysis is somewhat limited within Airtable itself. Over time, the Airtable team has rolled out additional functionality from the summary bar, which offers column-by-column summary stats, to apps (fka blocks), such as the pivot table to now more comprehensive data visualization apps. Even with the app / dashboard concept and the associated marketplace, there is still a gap in what an experienced business or data analyst might be looking for and what is offered. Further. Airtable might be just one part of a larger data storage system used at with the organization such that even if a whole bunch more functionality were added, there would still be desire to perform analysis elsewhere. For example, while you might start by building a v1 of your CRM and ticketing systems in Airtable, over time you may find yourself migrating certain systems to purpose-built tools such as Salesforce or Zendesk. Ideally visualizations and analysis can be performed in one place that isn’t vertically integrated with the data solution (not to mention in general avoiding vendor lock-in).

Recognizing the desire of its users to use existing BI tools, the Airtable team has written a helpful blog piece, “How to connect Airtable to Tableau, Google Data Studio, and Power BI”. The prebuilt integrators discussed in the post are helpful if you use one of those BI tools and, in the case of Tableau and Data Studio, actually require an expensive, enterprise Airtable account.

Railsware built Coupler.io (fka Airtable Importer) to make synchronizing data from Airtable into Google Sheets easy. This opens up using Google Sheets as a BI tool or simple data warehouse. There are a few other tools that attempt to synchronize from Airtable to traditional databases including Sequin (for CRM data) and CData and others like BaseQL that expose Airtable with a GraphQL API.

I do some of my analysis in Pandas and some in Superset and I wanted a way to connect either of these to Airtable. I am also “lazy” and looking for a solution that doesn’t involved setting up a data warehouse and then running an ETL / synchronization tool.

Implementation

I figured I could achieve my objective by building a Shillelagh Adapter which would in effect create a SQLAlchemy Dialect exposing Airtable as a database and allow any Python tool leveraging SQLAlchemy or expecting a DB-API driver to connect.

Fortunately Airtable offers a rich RESTful API for both querying data as well as updating and inserting data. There are a number of client libraries for different languages wrapping this API. The library for JavaScript is “official” and is maintained by Airtable itself. There is a well-maintained, unofficial Python library with blocking I/O and a less well maintained library for Python based on asyncio (non-blocking). For the purposes of building the DB Dialect, I stick with blocking I/O as that library is better maintained and the underlying libraries used (Shillelagh –> APSW), won’t be able to take advantage of non-blocking I/O.

Metadata

In order to expose an Airtable Base as a database we need to know the schema. The “schema” in practice is the table names and for each table, the list of column names and for each column its data types. Fortunately Airtable offers a Metadata API that provides this info. Unfortunately, the feature is currently invite only / wait-listed. I applied but have not been lucky enough to be accepted. I document a number of different workarounds for obtaining the metadata here. They roughly boil town to a) hardcoding the table list + pulling down some sample data from each table and then guessing the field types, b) allowing the user to pass in a schema definition (can be offline scraped from the API docs page), or c) hitting an endpoint that exposes the metadata (e.g. shared view or base). Option a) is the most seamless / low-friction to the user whereas c) is the most reliable. I ended up starting with option a) but offering partial support for option c).

There are a few gotchas with the guessing approach, the most annoying of which is that the Airtable API entirely omits fields within a record if the value is null or “empty”. This is particularly problematic when peeking at the first N rows in cases in which new fields were added later on. Currently there is no easy way to pull the last N rows. Other fields may be polymorphic, such as those coming from a formula. We err on the side of being conservative, for example assuming that even if we see only integers the field may at some point contain a float.

Leveraging the API

The Airtable “backing store” supports sorting, filtering, and page size limits2. Shillelagh by way of APSW by way of SQLite virtual tables is able to opt-in to these features to reduce the amount of work that has to be done in memory by SQLite and instead push the sorting and filtering work to the backing store. We just need to translate from what Shillelagh gives us to what the API expects.

Sorting is relatively easy as it just involves passing the the field names and the desired sort order to the API. Translating filters involves building an Airtable formula that evaluates as true for the rows we want to return. This was pretty straight forward except for 1) when dealing with IS NULL / IS NOT NULL filters and 2) filtering by ID or createdTime (these two values are returned on every record). Writing a filter to select for nulls was tricky in that Airtable’s concept of null is BLANK() which is also equal to the number 0. Filters for ID and createdTime are able to leverage built-in functions of RECORD_ID() and CREATED_TIME().

Value Encoding Gotchas

Once a given column’s data type is known, decoding is generally pretty straightforward. The gotchas were: parsing relationships (Link records and Lookups), dealing with floats, and dealing with formulas. Relationships are always represented in the API as arrays, regardless of whether “allow linking to multiple records” is checked. In these cases, the array value needs to be flattened for support in SQLite / APSW. Floats are generally encoded according to the JSON Spec; however, JSON does not support “special” values like Infinity of NaN. Rather than sending a string value for these, the Airtable API returns a JSON object with a key of specialValue with a corresponding value. Errors resulting from formulas are likewise encoded as an object with a key of error and a corresponding error code (such as #ERROR).

Database Engine Specs (Superset)

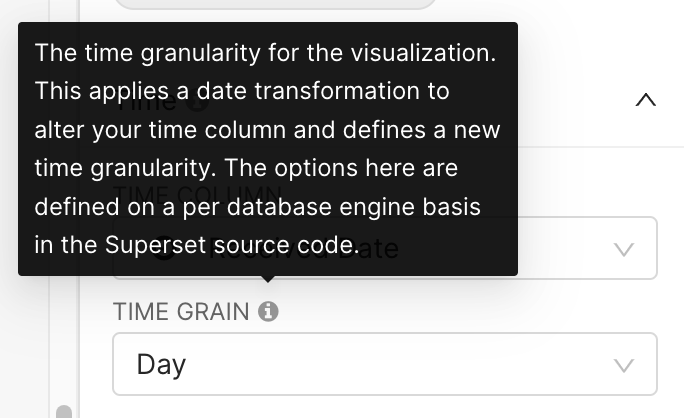

A “database engine spec” is required in order to fully utilize Superset when connected to a new datasource type (i.e. our Dialect). Basically the spec tells Superset what features the DB supports. The spec is required for any sort of time series work that requires time-binning. There is a pretty confusing message about “Grain” and how the options are defined in “source code”:

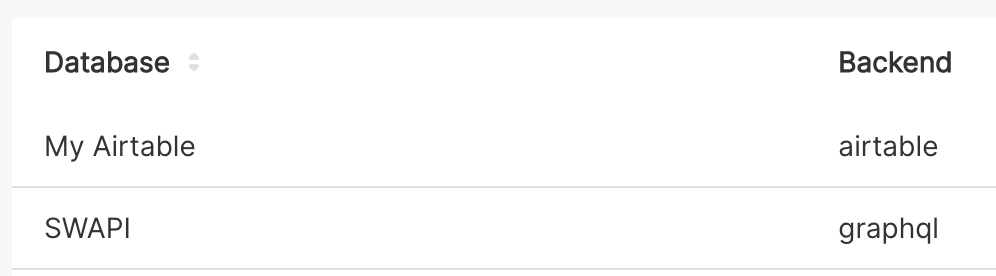

Ultimately I found this blog post from Preset and this implementation for the GSheets datasource, which I based mine on. You do also have to register the spec using an entry_point. Once I added this Spec, I was then offered a full set of time grains to choose from. Superset does also now call out the datasource’s “backend”:

I am not currently taking advantage of the UI / parameters (it is all pretty undocumented), but from what I see it looks as if I can tweak the UI to gather specific values from the user.

JOINing across Tables

With our Airtable Tables now accessible in SQLAlchemy as database tables, I figured I would try using SQL to perform a JOIN between two Tables in the Base. The JOIN worked, in that the data returned was correct and as expected; however, the performance was terrible and I observed far more calls to the Airtable API than expected and filters were not being passed in. For example if I were joining tables A and B on B.a_id = A.id, I would we see get_data for A called once (with no filter) and then get_data for B called n times (with no filters passed in) where n is the number of rows in A.

I thought this might be due to SQLite’s use of a sequential scans rather than an index scan and tried writing a get_cost function to provide additional hints to the query planner. While this does cause SQLite to issues filtered queries to the API, cutting down on the amount of data fetched, it does not reduce the number of queries sent to the API. With a get_data function, I see a single call to get_data on A and then n called to get_data for B, but I now see ID= filters being passed in on each call. Using EXPLAIN QUERY PLAN, I see:

1 2 | |

whereas without a get_cost:

1 2 | |

Unfortunately the issue ultimately is that SQLite does not try to hold the tables in memory during a query and instead relies on the Virtual table implementation to do so. Without any caching in our Adapter, this means that our get_data methods is called n times on one of the two tables.

I opened this discussion on the SQLite forum where the tentative conclusion is to add a SQLITE_INDEX_SCAN_MATERIALIZED to tell SQLite to materialize the whole virtual table (keyed on the used constraints).3

-

For example building a “Source Handler” for GraphQL Mesh for Airtable or a clone of BaseQL.↩

-

While the API supports a

LIMIT(page size) concept, it uses cursor-based pagination so specifyingOFFSETis not possible.↩ -

There are some other hints to SQLite that might be important for query optimization such as

SQLITE_INDEX_SCAN_UNIQUE:the idxFlags field may be set to SQLITE_INDEX_SCAN_UNIQUE to indicate that the virtual table will return only zero or one rows given the input constraints.

However this requires some changes to APSW. Additionally indicating the estimated number of rows a query will return, which also requires a change to APSW.↩